Engineering

How will AI change the practice of engineering?

In a lecture at Harvard, Matt Welsh says:

The field of Computer Science is headed for a major upheaval with the rise of large AI models, such as ChatGPT, that are capable of performing general-purpose reasoning and problem solving. We are headed for a future in which it will no longer be necessary to write computer programs. Rather, I believe that most software will eventually be replaced by AI models that, given an appropriate description of a task, will directly execute that task, without requiring the creation or maintenance of conventional software. In effect, large language models act as a virtual machine that is “programmed” in natural language. This talk will explore the implications of this prediction, drawing on recent research into the cognitive and task execution capabilities of large language models.

“The Model is the Computer”

Sam Altman: “ChatGPT is an eBike for the mind”

How much further can these models scale? Current models have seen only the tip of the iceberg of the data on the internet.

See Using Fixie.AI

Seattle-based GitClear examined four years of data covering 150M lines of code and concluded that the faster pace of code added with AI assistance may contribute to “churn” and add to technical debt, much as code copy/pasted into haphazard projects by many uncoordinated developers does.
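To make “churn” concrete, here is a minimal sketch of one way to measure it: the share of newly added lines that are rewritten or deleted shortly after being written. The `LineChange` record, the `churn_rate` helper, and the 14-day window are all assumptions for illustration, not GitClear's published methodology or tooling.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


# Hypothetical record of one added line and, if it was later changed, when.
@dataclass
class LineChange:
    added_on: date
    rewritten_on: Optional[date]  # None if never rewritten or deleted


def churn_rate(lines: list[LineChange], window_days: int = 14) -> float:
    """Fraction of added lines rewritten or deleted within `window_days`.

    The 14-day default window is an assumption chosen for illustration.
    """
    if not lines:
        return 0.0
    window = timedelta(days=window_days)
    churned = sum(
        1
        for line in lines
        if line.rewritten_on is not None
        and line.rewritten_on - line.added_on <= window
    )
    return churned / len(lines)


history = [
    LineChange(date(2023, 1, 2), date(2023, 1, 5)),   # rewritten after 3 days: churn
    LineChange(date(2023, 1, 2), date(2023, 3, 1)),   # rewritten after 58 days: not churn
    LineChange(date(2023, 1, 3), None),               # never touched again
    LineChange(date(2023, 1, 4), date(2023, 1, 18)),  # exactly 14 days: counts as churn
]
print(f"churn rate: {churn_rate(history):.0%}")  # churn rate: 50%
```

Comparing this ratio for code written with and without an assistant is the kind of signal the finding above rests on; the window length strongly affects the number.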

Codegen raised $16M to develop agent-based code writing.

Whereas Copilot, CodeWhisperer and others focus on code autocompletion, Codegen sees to “codebase-wide” issues like large migrations and refactoring (i.e. restructuring an app’s code without altering its functionality).

Matt Rickard: How AI Changes Workflows

Maybe issue tracking comes before code in future DevOps platforms? Does the code need to be checked in?

UX Implications

Vishnu Menon's predictions in “Language Model UXes in 2027”:

- Chat consolidation

- Persistence across uses

- Universal access (multimodality)

- Commodified, local LLMs

- Dynamically Generated UI & Higher Level Prompting

- Proactive interactions

Arguments against AI Coding

The article “Is it possible to program non-trivial applications and customize code without knowing much about programming?” walks through an attempt to get newbies to write a Flappy Bird-style game using ChatGPT. It concludes that this is very difficult.

And this great comment from miraculixx:

This is because the use of (a programming) language has never been the real challenge of writing code. The real challenge is knowing what you want, and to be able to break it down in sufficient detail for the result to be useful, be that a game or some other applications. This process requires at least two traits: the ability to think in abstract terms and the ability to think logically. These two traits are very rare in people (and non existent in machines). For this reason alone there will always be some people who are better at producing code, and it does not matter what tool they use. At best ChatGPT & co. are tools that make these people more productive

See also Edsger Dijkstra’s essay On the foolishness of “natural language programming”.

GitHub commissioned a study that estimates these tools could save developers up to 30% of their time, which could lead to an economic impact of $1.3 trillion in the United States alone.

Meanwhile, Stack Overflow usage has plunged from 14M to 10M page views since November 2022.

“The Rise of the AI Engineer” is a nice Substack writeup of the differences between AI engineering and machine learning.

Implications for Software Engineering

One comparison of people with and without access to ChatGPT found a 50% efficiency advantage for those using the technology.

On the other hand, a December 2022 Stanford study concludes that people who use AI code assistants write less secure code.

Patterns for LLM-based Products

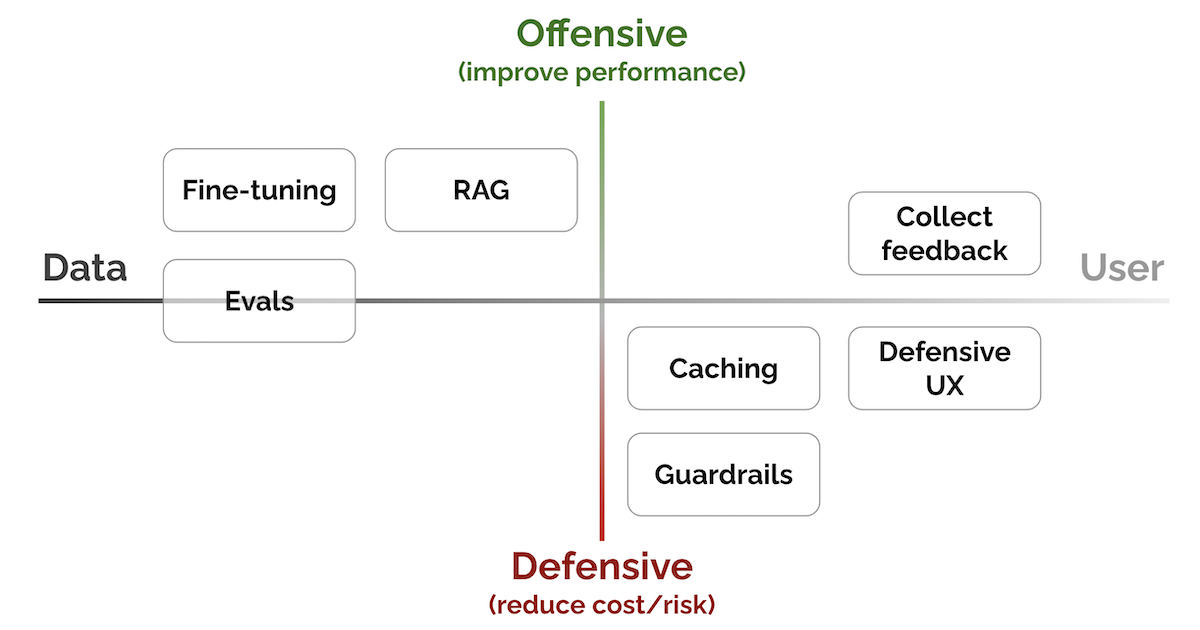

Eugene Yan has a lengthy breakdown, “Patterns for Building LLM-based Systems & Products”:

- Evals: To measure performance

- RAG: To add recent, external knowledge

- Fine-tuning: To get better at specific tasks

- Caching: To reduce latency & cost

- Guardrails: To ensure output quality

- Defensive UX: To anticipate & manage errors gracefully

- Collect user feedback: To build our data flywheel
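As one example of these patterns, caching can be as simple as memoizing model responses on a normalized prompt so repeated requests skip the expensive call. The sketch below is a minimal exact-match cache under stated assumptions: `fake_model` is a hypothetical stand-in for a real model API, and the normalization (lowercasing, collapsing whitespace) is an illustrative choice, not the method from Yan's article.

```python
import hashlib
from typing import Callable


class PromptCache:
    """Exact-match cache for LLM responses, keyed on a normalized prompt.

    Normalization here (lowercase, collapsed whitespace) is an assumption;
    real systems may cache on item IDs or use semantic similarity instead.
    """

    def __init__(self, generate: Callable[[str], str]):
        self._generate = generate  # the expensive model call being wrapped
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._generate(prompt)
        self._store[key] = response
        return response


# Hypothetical stand-in for a real model API call.
def fake_model(prompt: str) -> str:
    return f"summary of: {prompt.strip()}"


cache = PromptCache(fake_model)
cache.get("Summarize this ticket")
cache.get("  summarize   THIS ticket ")  # same normalized prompt: cache hit
print(cache.hits, cache.misses)  # 1 1
```

Exact matching is cheap and safe but misses paraphrases; semantic caching raises the hit rate at the risk of returning an answer to a subtly different question.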

Building LLM Applications

A comprehensive guide to building RAG-based LLM applications for production.

Base LLMs (e.g., Llama-2-70b, gpt-4) are only aware of the information that they’ve been trained on and will fall short when we require them to know information beyond that. Retrieval augmented generation (RAG) based LLM applications address this exact issue and extend the utility of LLMs to our specific data sources.
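The core RAG loop is: score your own documents against the query, keep the best matches, and prepend them to the prompt so the model can answer from data it was never trained on. The sketch below shows that flow under simplifying assumptions: plain term-frequency vectors with cosine similarity stand in for learned embeddings and a vector database, the sample documents are invented, and the resulting prompt would still need to be sent to a model.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Prepend retrieved passages so the model answers from our own data."""
    sources = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the sources below.\nSources:\n{sources}\n\nQuestion: {query}"


# Invented example documents standing in for a private data source.
docs = [
    "Our on-call rotation changes every Monday at 9am.",
    "The billing service is written in Go and deployed on Kubernetes.",
    "Expense reports are due on the last Friday of each month.",
]
query = "When does the on-call rotation change?"
prompt = build_prompt(query, retrieve(query, docs, k=1))
print(prompt)
```

Swapping the bag-of-words scoring for embedding similarity changes only `retrieve`; the prompt-assembly step stays the same.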

Miessler’s Proposed Architecture

Information security professional Daniel Miessler (pronounced ME-slur) proposes “SPQA: The AI-based Architecture That’ll Replace Most Existing Software”.