How Does it Work?

My explanation of how LLMs do what they do

How does an LLM-based generative system work?

Imagine you have access to a zillion documents, preferably curated in some way that reassures you about their quality and consistency. Wikipedia, for example, or maybe Reddit and other posts that have been sufficiently up-voted. Maybe you also have a corpus of published articles and books from trustworthy sources.

It would be straightforward to tag all words in these documents with labels like “noun”, “verb”, “proper noun”, etc. Of course there would be lots of tricky edge cases, but a generation of spelling and grammar-checkers makes the task doable.

Now instead of organizing the dictionary by parts of speech, imagine your words are tagged semantically. A word like “queen”, for example, is broken into the labels “female” and “monarch”; change the label “female” to “male” and you have “king”. A word like “Starbucks” might include labels like “coffee”, as well as “retail store” or even “Fortune 500 business”. You can shift the meaning by changing the labels.
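As a toy sketch of the label-swapping idea, here is a tiny hand-written lexicon in Python. The `LEXICON` table and the `swap_label` helper are made up for illustration; real models learn dense numeric vectors rather than tidy label sets like these.

```python
# Toy illustration only: real models learn dense numeric vectors,
# not hand-written label sets like these.
LEXICON = {
    "queen": frozenset({"female", "monarch"}),
    "king": frozenset({"male", "monarch"}),
    "woman": frozenset({"female", "person"}),
    "man": frozenset({"male", "person"}),
}

def swap_label(word, old, new):
    """Replace one semantic label on `word` and look up the word that matches."""
    labels = (LEXICON[word] - {old}) | {new}
    for candidate, candidate_labels in LEXICON.items():
        if candidate_labels == labels:
            return candidate
    return None  # no word in the toy lexicon carries exactly these labels

print(swap_label("queen", "female", "male"))  # king
```

Swapping a label that leads nowhere in the lexicon (say, "monarch" to "robot") returns `None`, which is a reminder of how much coverage a real semantic model needs.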

Generating a good semantic model like this would itself be a significant undertaking, but people have been working on this for a while, and various good “unsupervised” means have been developed that can do this fairly well.

Auto-completion is a simple form of this. With a corpus of any size, you can estimate the probability that a particular word will follow another word. Interestingly, you can do this in any human language without even knowing about that language – the probabilities of word order come automatically from the sample sentences you have from that language.
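Here is a minimal sketch of that kind of word-follows-word counting, assuming a three-sentence corpus invented for the example. The function names are hypothetical, and a real system would use far more text and a far richer model than simple pair counts.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count how often each word follows another in the sample sentences."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def next_word_probabilities(follows, word):
    """Turn raw follow counts into probabilities for the next word."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

# Made-up sample sentences; any language would work the same way.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigrams(corpus)
probs = next_word_probabilities(model, "the")
print(max(probs, key=probs.get))  # cat
```

Nothing in the code knows English: it only splits on whitespace and counts, which is why the same trick works for any language you have sample sentences for.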

Now go a step above auto-completion and allow for completion at the sentence level, or even at the level of paragraphs or chapters. Given a large enough corpus of quality sentences, you could probably guess with greater-than-chance probability the kinds of sentences and paragraphs that should follow a given set of sentences. Of course it won’t be perfect, but already you’d be getting an uncanny level of sophistication.

Pair this autocompletion capability with the work you’ve done with semantic labeling. And maybe go really big, and do this with even more meta-information you might have about each corpus. A Wikipedia entry, for example, knows that it’s about a person or a place. You know which entries link to one another. You know the same about Reddit, and about web pages. With enough training, you could probably get the computer to easily classify a given paragraph into various categories: this piece is fiction, that one is medical, here’s one that’s from a biography, etc., etc.

Once you have a model of relationships that can identify the type of content, you can go the other direction: given a few snippets of one known form of content (biography, medical, etc.), “auto-complete” with more content of the same kind.

This is an extremely simplified summary of what’s happening, but you can imagine how with some effort you could make this fairly sophisticated. In fact, at some level isn’t that what we humans are already doing? If your teacher or boss asks you to write a report about something, you are taking everything you’ve seen previously about the subject and generating more of it, preferably in a pattern that fits what the teacher or boss is expecting.

Some people are very good at this: taking what they heard from various other sources and summarizing it in a new format.

To respond to a request like “List five things wrong with this business plan”, you don’t necessarily need to understand the contents. If you’re good enough at re-applying the patterns you’ve seen from similar projects, you’ll instinctively throw out a few tropes that have worked for you in the past: “The plan doesn’t say enough about the competition”, “The sales projections don’t take X and Y into account”, “How can you be sure you’ll be able to hire the right people?” There are thousands, maybe hundreds of thousands, of books and articles that include these patterns, so you can imagine that with a little tuning a computer could do an exceptional job at this.

Wisdom of the Crowds

An LLM is sampling from an unimaginably complex mathematical model of the distribution of human words – essentially a wisdom of crowds effect that distills the collective output of humanity in a statistical way.
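To make "sampling from a distribution" concrete, here is a small sketch that draws words from a hand-written probability table. The `NEXT_WORD` table and its numbers are invented for the example; in a real LLM the probabilities come from the trained model and cover its whole vocabulary.

```python
import random

# Hypothetical next-word probabilities after the prompt "the cat";
# the numbers are made up for illustration.
NEXT_WORD = {"sat": 0.5, "ate": 0.3, "slept": 0.15, "flew": 0.05}

def sample_next_word(distribution, rng):
    """Draw one word at random, weighted by its probability."""
    words = list(distribution)
    weights = list(distribution.values())
    return rng.choices(words, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so runs are repeatable
draws = [sample_next_word(NEXT_WORD, rng) for _ in range(1000)]
print({w: draws.count(w) for w in NEXT_WORD})
```

Over many draws the counts track the probabilities, but any single draw can still pick an unlikely word, which is one reason the same prompt can produce different answers.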

Where do you get the documents?

OpenAI draws its training data from more than 200 million documents, 93% of which are in English, selected to be representative of a broad space of human knowledge.

Of course it starts with Wikipedia: almost 6 million articles.

One set of words comes from Common Crawl: a large, publicly available dataset of billions of web pages.

Another is a proprietary corpus called WebText2, made up of more than 8 million documents scraped from particularly high-quality web pages, such as those that are highly upvoted on Reddit.

Two proprietary datasets, known as Books1 and Books2, contain tens of thousands of published books. These datasets include classic literature, such as works by Shakespeare, Jane Austen, and Charles Dickens, as well as modern works of fiction and non-fiction, such as the Harry Potter series, The Da Vinci Code, and The Hunger Games. There are also many other books on a variety of topics, including science, history, politics, and philosophy.

Also high on the list: b-ok.org (No. 190), a notorious market for pirated e-books that has since been seized by the U.S. Justice Department. At least 27 other sites identified by the U.S. government as markets for piracy and counterfeits were present in the data set.

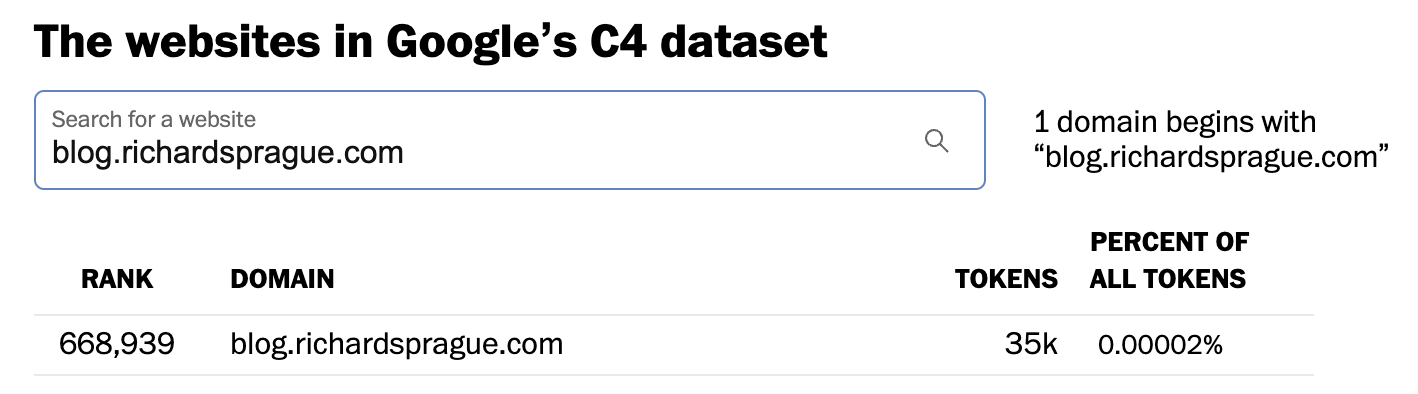

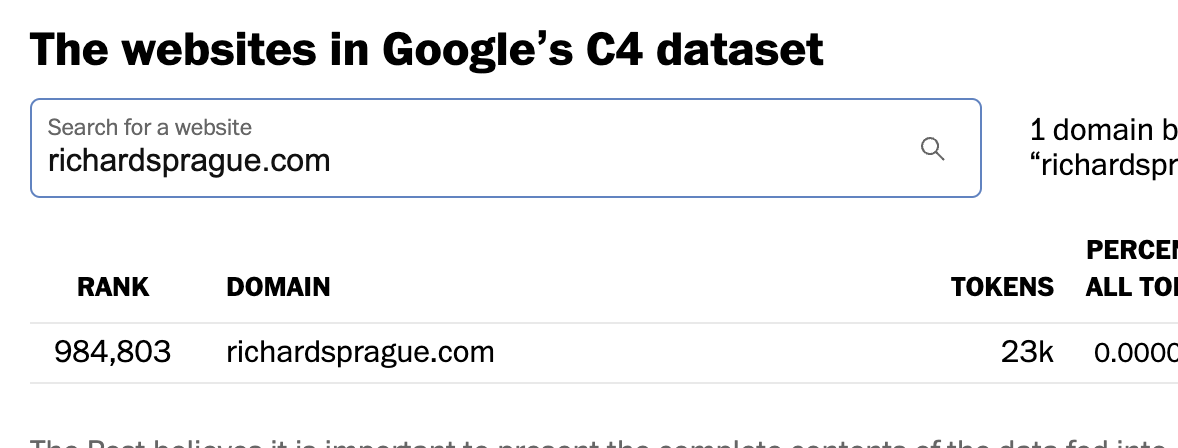

The Washington Post has an interactive graphic that digs into more detail. (Also discussed on HN.)

Yes, they crawl me:

More Data

There is another dataset, Books3, originally intended to be an open source collection of books.

See Battle over books, about Books3 (Wired), and another from The Atlantic, Revealed: The Authors Whose Pirated Books are Powering Generative AI.

a direct download of “the Pile,” a massive cache of training text created by EleutherAI that contains the Books3 dataset, plus material from a variety of other sources: YouTube-video subtitles, documents and transcriptions from the European Parliament, English Wikipedia, emails sent and received by Enron Corporation employees before its 2001 collapse, and a lot more

The Pile is defined and described in this 2020 paper at arXiv.

See more details on HN, including direct links to the latest downloads.

Peter Schoppert writes much more about the copyright issues involved in The books used to train LLMs.

Organizing the data

At the core of a transformer model is the idea that many of the intellectual tasks we humans do involve taking one sequence of tokens – words, numbers, programming instructions, etc. – and converting it into another sequence. Translation from one language to another is the classic case, but the insight at the heart of ChatGPT is that question-answering is another example. My question is a sequence of words and symbols like punctuation or numbers. If you append my question to, say, all the words in that huge OpenAI dataset, then you can “answer” my question by rearranging it along with some of the words in the dataset.

The technique of rearranging one sequence into another is called Seq2Seq.

References and More Details

Dodge et al. (2021)

Dodge, J., Sap, M., Marasović, A., Agnew, W., Ilharco, G., Groeneveld, D., & Gardner, M. (2021). Documenting the English Colossal Clean Crawled Corpus. ArXiv, abs/2104.08758.

An in-depth way to study what data was used to train language models, the results of which suggest that many closed-source models likely didn’t train on popular benchmarks: Oren et al. (2023)

Oren, Y., Meister, N., Chatterji, N., Ladhak, F., & Hashimoto, T. B. (2023). Proving Test Set Contamination in Black Box Language Models (arXiv:2310.17623). arXiv. http://arxiv.org/abs/2310.17623

we audit five popular publicly accessible language models for test set contamination and find little evidence for pervasive contamination.

The Mathematics of Training LLMs — with Quentin Anthony of Eleuther AI

deep dive into the viral Transformers Math 101 article and high-performance distributed training for Transformers-based architectures.

An observation on Generalization: a one-hour talk by Ilya Sutskever, OpenAI’s Chief Scientist. He’s previously talked about how compression may be all you need for intelligence. In this lecture, he builds on the ideas of Kolmogorov complexity and how neural networks implicitly seek simplicity in the representations that they learn. He provides a clarity of thought about the generalization of these novel systems that is rarely seen in the industry.

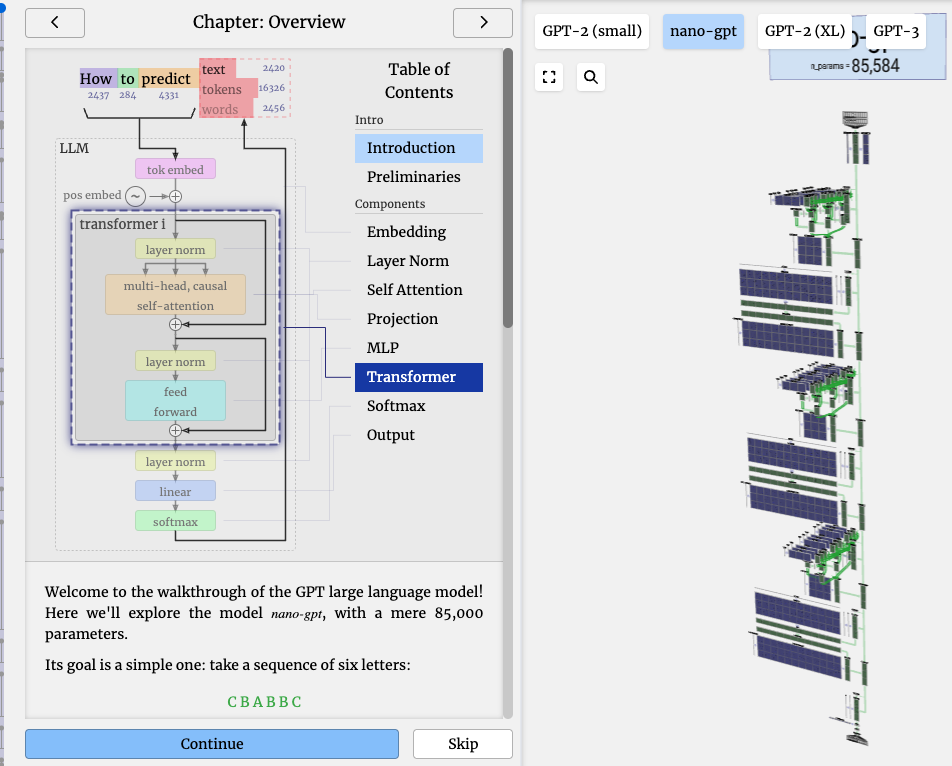

Brendan Bycroft wrote a well-done step-by-step visualization of how an LLM works

Welcome to the walkthrough of the GPT large language model! Here we’ll explore the model nano-gpt, with a mere 85,000 parameters.

Its goal is a simple one: take a sequence of six letters:

C B A B B C and sort them in alphabetical order, i.e. to “ABBBCC”.
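To pin down what that task asks for, here is a small sketch of the input and target pair, written as ordinary Python rather than as a neural network. The `make_example` helper is hypothetical and is not taken from the visualization; it only shows the behavior the model is trained to reproduce.

```python
def make_example(letters):
    """Build one (input, target) pair for the letter-sorting task."""
    return letters, "".join(sorted(letters))

prompt, target = make_example("CBABBC")
print(prompt, "->", target)  # CBABBC -> ABBBCC
```

The model never calls a sort routine. It has to learn, from many pairs like this one, to emit the target one letter at a time.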